We are close to understanding almost everything about position-based dynamics (PBD). While reading these two articles on PBD and its successor XPBD, I quickly realized that there were many concepts completely unknown to me. So, I once again decided to write about them.

I will write this week about the PBD simulation loop, line by line, in order to better understand it all. In the next article, I will write about PBD’s successor, XPBD. At the end, I will choose between PBD and XPBD as a simulation loop to move towards setting up a cloth simulation.

The position-based dynamics (PBD) algorithm

Here is the algorithm to run a simulation with PBD:

Figure 1 : L’algorithme de simulation de PBD - J. Bender, M. Müller and M. Macklin / A Survey on Position Based Dynamics, 2017

The lines (1)-(3) are to initialize the position and the velocity of the particles because the system is of second order with respect to time: . Otherwise, there would be too many unknown variables.

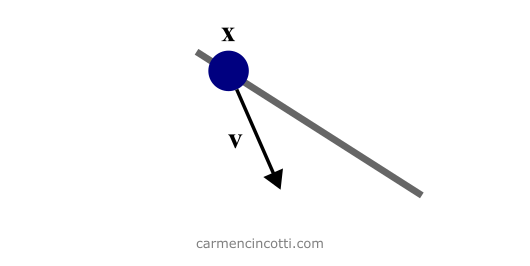

Figure 2 : Initialization of PBD. Particle x is constrained to the line (in gray). It has a velocity of vector v.

Recall that PBD works exclusively and directly on the positions of particles in a system. It is therefore not necessary to maintain the acceleration layer to calculate the velocity and thus the position in the future steps.

The loop

We are now entering the simulation loop! At each iteration, we take a step in time, to advance the simulation.

Lines (5)-(6) (of Figure 1) perform a symplectic Euler integration step to advance position and velocity. The new location, is just a prediction of the final position of the particle not subject to any constraints. We don’t consider constraints until later.

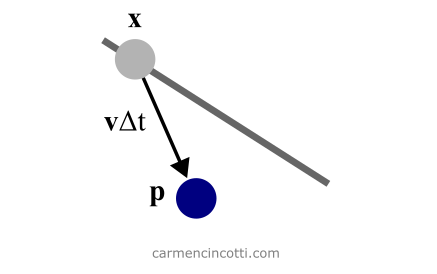

Figure 3: The prediction of the x position of the particle. The prediction is represented by a second temporary variable p.

Symplectic Euler integration

Typical Euler integration advances a system in time , or referred to as here, as follows:

Note that the velocity is updated after updating the position . However, this method increases the potential energy to the system it simulates. To combat this, we use Symplectic Euler integration:

We first determine the velocity of the system in the designated time step, , then use this new velocity to calculate the position.

In line (7), we continue with the generation of non-permanent external constraints such as collision constraints. We need to generate them at this precise moment to perform continuous collision detection with the new predicted position, , from the previous step. I will come back to the subject of collisions in a later article.

The “nonlinear” Gauss—Seidel solver

During rows (8)-(10), a solver iteratively corrects the predicted positions, in order to satisfy the constraints defined in the system.

In the article A Survey on Position Based Dynamics, 2017 - Jan Bender , Matthias Müller and Miles Macklin there is this idea of a non-linear Gauss-Seidel solver, because Gauss-Seidel is usually used to solve linear systems. Recall that the difference between the linear constraints and non-linear is:

-

Linear - Linear inequality constraints have the form . Linear equalities have the form .

-

Nonlinear - Nonlinear inequality constraints have the form , where . Similarly, nonlinear equality constraints also have the form .

The Gauss—Seidel method

The Gauss-Seidel method is used to solve a system of linear equations. Recall that a linear equation takes the form:

Note that this numerical method is iterative. In other words, we use a loop to iteratively approach an approximate solution… which gave me pause. Why do I have to use a numerical method to solve an equation like ? Here is a great resource (slide 2) that answers this question.

Looking at this example of the method in action - we see what is particularly interesting about this method. We substitute the old values for our brand new numerically calculated values during each iteration.

Applying this knowledge regarding the satisfaction of the constraints in the system, we see that each constraint equation will be solved individually and the result of one will immediately get involved in the computation of the other. However, our system has only one constraint to be simpler as an example. Let’s imagine that if there were two that during an iteration, the result of one will influence immediately the result of the others.

In any case, the goal is to solve the constraints like in this image below:

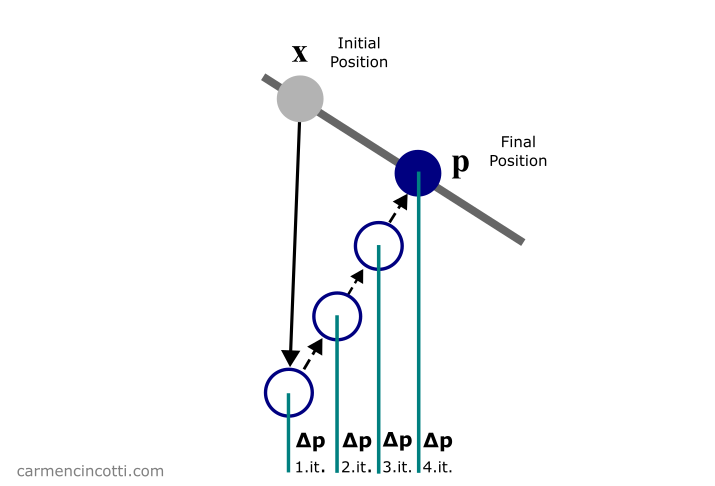

Figure 4: The Gauss—Seidel algorithm in action. The predicted position p is moved to satisfy the constraint (the gray line).

More specifically, we want to iteratively update to satisfy our constraints:

Figure 5: The predicted position p is moved iteratively by to satisfy the constraint (the gray line).

Great! We have a solver ready to help us solve the system 🎉! However, we have a small question that we must answer 🤔:

How do we find ?

Correct the particle position with the Newton—Raphson method

To find the displacement of the particle , we will use a process based on the Newton-Raphson method.

The Newton—Raphson method

The goal of the Newton—Raphson method is to iteratively determine the roots of an arbitrary, nonlinear function. In other words, we minimize this function (assume a function ) by finding the value of which will make .

Let’s take for example this function . We start by assuming that the value will minimize the function. We draw a line tangent to the curve like so:

It is clear that is not minimized by , because . We continue to search for roots by trying again at :

Finally, we repeat this process to finally find the root .

Following the Newton-Raphson method, we want to linearize the constraint functions. In the case of an equality constraint, it is approximated by linearizing it as follows:

To maintain the linear and angular momentum, we restrict to be in the direction of :

where is the masses of the particles (we invert them to weight the corrections proportional to the inverse masses). At first sight, I thought to myself uh, what? However, I created some graphs to at least clarify this equation (even if I am still unclear on the conservation of moments).

Imagine that there is a constrained function . The idea is that we want an arbitrary point to stay on this line regardless of external forces:

We want to minimize . To do this, we travel in the direction of the gradient of , :

If I arbitrarily place a point on this graph and I would like the point to always stay on - it makes sense that I make it travel in the direction of the gradient of to get back to line as fast as possible!

Our next step is to find the value of our Lagrange multiplier (a scalar factor) and then calculate . Rewriting the linear approximation of is as follows:

where

We can perform a substitution to find :

After finally finding and therefore , we update .

Recall that is a temporary positional variable. We update our original position variable in the next step.

Then we go through another iteration of the “nonlinear” Gauss-Seidel solver.

The final update of position and velocity with verlet Integration

During the lines (10)-(12) - after the Gauss-Seidel solver, we update the position and the velocity of each particle using an integration method called Verlet integration:

With Verlet’s integration - instead of storing each particle’s position and velocity, we store its new position and its previous position . As you can see, with them we can calculate the new velocity, !

Then the loop starts again and we start the whole process again! 🎉

Next time

PBD can be extended to provide more visually pleasing simulations by its successor XPBD. We will see that in the next article!

Resources

- Non-linear Gauss-Seidel solver - Real-Time Physics Simulation Forum

- Advanced Character Physics - Thomas Jakobsen

- Efficient Simulation of Inextensible Cloth - Rony Goldenthal, David Harmon, Raanan Fattal, Michel Bercovier, Eitan Grinspun

- A Survey on Position Based Dynamics, 2017 - Jan Bender , Matthias Müller and Miles Macklin

- Position-based simulation of elastic models on the gpu with energy aware gauss-seidel algorithm - Ozan Cetinaslan

- Simulation of Mooring Lines Based on Position-Based Dynamics Method - Xiaobin Jiang, Hongxiang Ren, Xin He

- Iterative methods for Ax = b - www.robots.ox.ac.uk